Summary

Opsmorph has been preparing data lake patterns for cloud storage of data sets of varied sizes and formats. These patterns can help reduce development time in data analytics solutions for teams both large and small. In this blog we share some tips on what makes a good data lake and how data discipline as code can prevent headaches further down the road.

Problem

Data analytics teams need to store and share data of various sizes and in a variety of formats, but problematic scenarios are common. A new team member picks up a project but cannot find the correct data set used to train an algorithm. A team returns to a project later to find that a data set has been modified, but no one remembers by whom or why. A frontend developer cannot easily query the small subset of fields needed for a quick, simple dashboard for the review meeting. The mobile developer finds that the data scientists have been using a different geospatial data standard. The director is questioning why data is stored in multiple locations requiring multiple costly enterprise licenses. Unfortunately, problems of this nature are all too familiar to many analytics teams and can dramatically reduce productivity if not remedied.

Solution

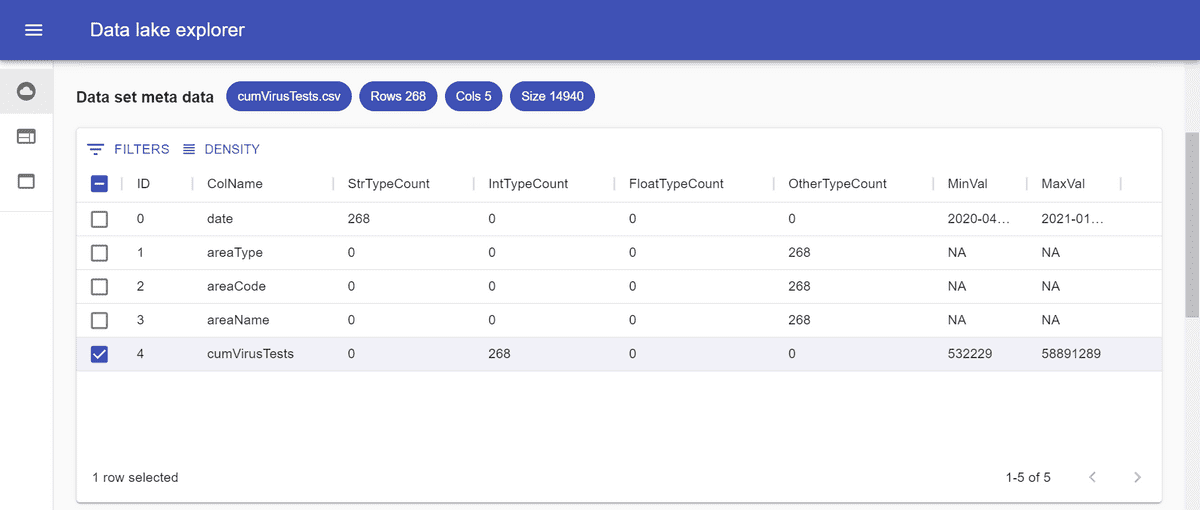

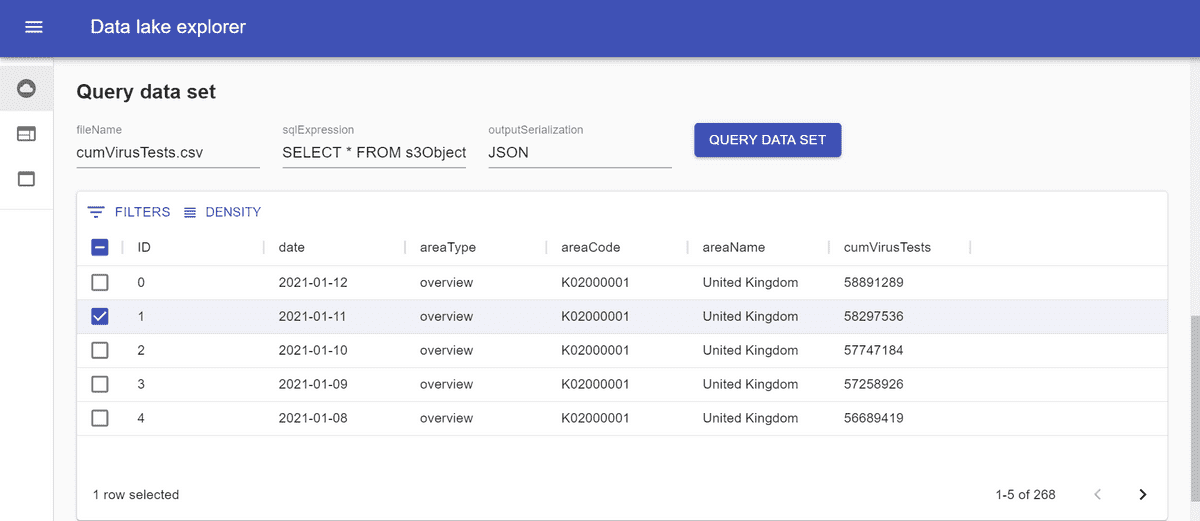

A well-designed data lake can store varied data formats in a cost-effective and scalable way, paving the way for big data analytics and new insights. Data lake storage policies allow uploads only of files with permitted extensions, enforcing data discipline by design and enhancing interoperability. Data lake crawlers extract metadata from files, store it in a data catalogue with sort and search functionality, and record who modified each file and when, automating many of the tedious but crucial data management tasks. Versioning enables rollback to correct mistakes. Application Programming Interfaces (APIs) and serverless query functionality rapidly and efficiently return only the data that is needed, when it is needed, reducing costs and preventing apps from running slowly or consuming too many resources. Easily enabled encryption in transit and at rest, access logs, and granular identity and access management controls keep data secure.
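As a rough illustration of a storage policy that only permits certain file extensions, the sketch below builds a bucket policy document in Python. It assumes the lake is backed by an Amazon S3 bucket; the bucket name, the list of extensions, and the function name `build_upload_policy` are hypothetical and are not taken from Opsmorph's components.

```python
import json

# Hypothetical values for illustration only.
ALLOWED_EXTENSIONS = ("csv", "json", "parquet")


def build_upload_policy(bucket_name, allowed_extensions=ALLOWED_EXTENSIONS):
    """Return an S3 bucket policy that denies uploads of any other file type."""
    # One ARN pattern per permitted extension, e.g. arn:aws:s3:::my-lake/*.csv
    permitted_keys = [
        f"arn:aws:s3:::{bucket_name}/*.{ext}" for ext in allowed_extensions
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUploadsOfOtherFileTypes",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                # NotResource applies the deny to every object key
                # EXCEPT those matching the permitted patterns.
                "NotResource": permitted_keys,
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy as JSON, ready to review before attaching it to a bucket.
    print(json.dumps(build_upload_policy("example-data-lake"), indent=2))
```

A deny statement like this does not grant access by itself, so uploaders would still need a separate allow for `s3:PutObject`. Pattern matching on object keys is case-sensitive, so under this sketch a file ending in `.CSV` would be rejected unless that variant is listed too.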

Getting Started

Opsmorph has been developing data lake design patterns written as infrastructure as code. These data lake components can be installed, customized, and integrated into customers' in-house cloud environments, or embedded in the Software-as-a-Service solutions that customers are developing, to help fast-track application development.

“We have been perfecting our internal data lake and it now powers all our data projects. The data catalogue and API make dashboard development lightning fast so we can see what is working and what is not, drop bad ideas quickly and prioritise where to expend more energy. We’re excited to see how we can help our customers use these data lake patterns in their solutions.”

Graham Hesketh, CTO, Founding Director of Opsmorph

FAQs

What license are the data lake components available under?

The data lake components Opsmorph can help you build are provided under an MIT license, meaning you are free to customize and commercialise them as you choose.

What cloud providers are the data lake components available on?

The data lake components are currently available for Amazon Web Services.

What support is available for understanding how to use the data lake components?

During a development project, Opsmorph can embed our developers within your team and work with you to help you install, understand, and customize the components. Alternatively, the solution can be installed as is with instructions for use. Further reading and suggested online learning resources can also be provided.

Is the data lake component a one-size-fits-all solution, or can it be broken up and customized?

The data lakes have many features, and our team of developers can work with you to plan a project to build a bespoke solution that best meets your needs, whilst fast-tracking the process with reusable components.